Lip Syncing Utility

Lip syncing is one of the most tedious, time-consuming tasks for animators, and it must be redone by hand for every language a game is translated into, which costs extra time and budget. For an advanced animation programming course, I set out to streamline the process by building a system in Unity that automatically syncs mouth shapes (phonemes) to audio, based on a user-provided text transcription.


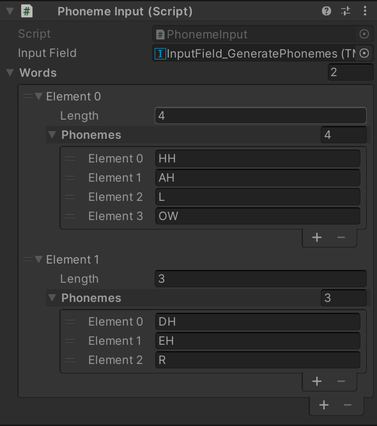

Through the Python for Unity API, the program interfaces with the CMU Pronouncing Dictionary to retrieve phonetic data for the words that make up the input transcription. The data is then passed to a C# script and sorted into a custom data structure that holds the phonetic information for each word so it can be accessed later.
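
The lookup step can be sketched roughly as follows. This is a minimal illustration in plain Python, not the project's actual script: it assumes the dictionary is available as text in the standard CMU format (one word per line followed by its phonemes, with stress digits on vowels and `(1)`-style suffixes for alternate pronunciations). The names `SAMPLE_DICT`, `parse_cmudict`, and `transcript_to_phonemes` are hypothetical, and the hand-off to C# is left out.

```python
import re

# Tiny illustrative excerpt in the CMU Pronouncing Dictionary text format.
# A real run would read the full dictionary file instead (assumption).
SAMPLE_DICT = """\
;;; comment lines start with three semicolons
HELLO  HH AH0 L OW1
HELLO(1)  HH EH0 L OW1
WORLD  W ER1 L D
"""


def parse_cmudict(text):
    """Map each lowercase word to a list of pronunciations (lists of phonemes)."""
    entries = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(";;;"):
            continue  # skip blanks and comments
        head, *phones = line.split()
        # "HELLO(1)" marks an alternate pronunciation of "HELLO"
        word = re.sub(r"\(\d+\)$", "", head).lower()
        # Drop stress digits (AH0 -> AH); mouth shapes do not depend on them
        phones = [p.rstrip("012") for p in phones]
        entries.setdefault(word, []).append(phones)
    return entries


def transcript_to_phonemes(transcript, entries):
    """Return (word, phonemes) pairs in transcript order.

    Words missing from the dictionary get an empty phoneme list;
    the first listed pronunciation is used when there are several.
    """
    words = re.findall(r"[a-z']+", transcript.lower())
    result = []
    for word in words:
        pronunciations = entries.get(word)
        result.append((word, pronunciations[0] if pronunciations else []))
    return result


if __name__ == "__main__":
    entries = parse_cmudict(SAMPLE_DICT)
    for word, phones in transcript_to_phonemes("Hello, world!", entries):
        print(word, phones)
```

Keeping the result as ordered (word, phonemes) pairs mirrors the per-word structure described above, so each word's phonemes can later be matched to that word's slot in the audio.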

After retrieving the phonetic information and converting it to a form that Unity can understand, the challenge becomes matching the phonemes to the timing of the original input audio. Assuming a clean audio recording, the start of each word can be identified from pauses in the audio waveform. The duration of the word can be assumed to be the time until the next pause, with the phonemes of that word distributed within that duration. With the start time and duration of each phoneme known, this information can be fed to the animation system, which adjusts the weight of each facial phoneme blendshape according to how closely the audio playback time matches the phoneme's start time. At the same time, the rotation of the jawbone is adjusted based on the amplitude of the audio, to give the effect of volume variation.
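
The timing logic above can be sketched in a few small functions. This is an illustrative Python version under stated assumptions, not the project's C# code: the audio is taken to be a list of samples in the range -1 to 1, a pause is any run of samples below a fixed amplitude threshold lasting at least `min_pause` seconds, phonemes are spread evenly across their word, and the blend weight falls off linearly with distance from the phoneme's start time. The function names, the threshold values, and the even and linear choices are all hypothetical.

```python
def find_word_segments(samples, sample_rate, threshold=0.05, min_pause=0.1):
    """Return (start_time, duration) in seconds for each run of sound
    separated by pauses. Assumes a clean recording."""
    min_pause_samples = max(1, round(min_pause * sample_rate))
    segments = []
    start = None     # index where the current word began, if any
    silent_run = 0   # consecutive quiet samples seen inside a word
    for i, sample in enumerate(samples):
        if abs(sample) >= threshold:
            if start is None:
                start = i
            silent_run = 0
        elif start is not None:
            silent_run += 1
            if silent_run >= min_pause_samples:
                end = i - silent_run + 1  # first quiet sample of the pause
                segments.append((start / sample_rate,
                                 (end - start) / sample_rate))
                start = None
                silent_run = 0
    if start is not None:
        # Audio ended mid-word: close the segment at the last loud sample
        end = len(samples) - silent_run
        segments.append((start / sample_rate, (end - start) / sample_rate))
    return segments


def distribute_phonemes(phonemes, word_start, word_duration):
    """Split a word's duration evenly among its phonemes (an assumption).

    Returns (phoneme, start_time, duration) tuples.
    """
    if not phonemes:
        return []
    step = word_duration / len(phonemes)
    return [(p, word_start + i * step, step) for i, p in enumerate(phonemes)]


def blend_weight(playback_time, phoneme_start, phoneme_duration):
    """Weight in [0, 1]: 1 when playback is exactly at the phoneme's start,
    falling linearly to 0 one phoneme-duration away on either side."""
    if phoneme_duration <= 0:
        return 0.0
    distance = abs(playback_time - phoneme_start)
    return max(0.0, 1.0 - distance / phoneme_duration)
```

In use, each detected segment would be paired in order with the corresponding word from the transcription, and `blend_weight` would be evaluated every frame for the phonemes near the current playback time. The jaw rotation could be driven in a similar way from a short-window average of the sample amplitudes.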
